Sunday, June 22, 2014

Rotaries v Intersections, An Exercise in Analysis

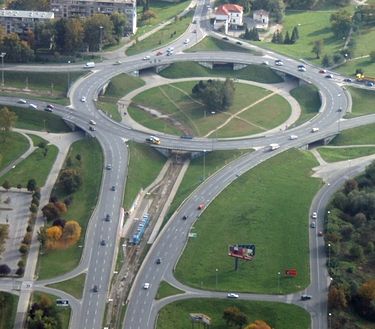

(Picture from here.)

This is the sort of thing my brain does. Which probably tells you more about me than you want to know. Indulge me. Next time I'll talk about space drives. I promise.

I live up here in Massachusetts. When I moved up here, back in the Cretaceous, I encountered something called a "rotary." It's also called a "roundabout", "circular intersection", "traffic circle" and other names. Some of which are even printable. They took a little getting used to but I managed. The problem with Mass drivers isn't rotaries; it's that they, and the state police, view traffic rules as mere suggestions or revenue sources. No one takes them seriously.

Then, in the 80s, there was a big push to get rid of rotaries. It is a certain truth in life that no intersection is so completely devoid of merit that a Massachusetts traffic engineer can't make it worse. Perfectly functional rotaries were replaced by obvious inventions of the devil. At left is a picture of what happened to the Route 2 Rotary in Cambridge. The black circle is where the rotary used to be. That mess is controlled by four barely functioning stoplights.

Get caught in this thing and kiss an hour of your life good-bye.

So, I'm driving into work today and not happy about it. To alleviate my boredom I took a back way in through Lincoln. The back way goes through a 5-points. This may be a term most people are not familiar with. It's a relic of my sordid youth in Alabama. A 5-points is a 5 way intersection. And, of course, there was little or no understanding by the drivers of who has which turn. I got through that and mused on intersections all the way in.

It came to me that intersections have an ascending complexity rule. It's an easy one to formulate. Let's consider the simplest intersection, a straight line with a stop sign. No cross streets.

Since the road is bi-directional, two drivers have to be considered, and each has exactly one choice: keep going. That gives a complexity level of two. The next kind of intersection has three points. Each of the three drivers has to consider two choices, for a complexity of six. This gives us a rule of:

C = R * (R-1), where R is the number of roads coming into the intersection and C is the complexity level.

This number goes up fairly quickly. For R = 3, the C value is 6. For R = 4, the C value is 12, and for my favorite, the 5-points, the C value is 20. If you graphed this, it would be an ascending curve that grows roughly as the square of the number of roads.
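The rule is simple enough to tabulate. Here's a minimal Python sketch; `intersection_complexity` is just a name I made up, and R counts the roads coming into the intersection.

```python
def intersection_complexity(roads: int) -> int:
    """C = R * (R - 1): each of R drivers chooses among the other R - 1 roads."""
    if roads < 2:
        raise ValueError("an intersection needs at least two roads")
    return roads * (roads - 1)


if __name__ == "__main__":
    # Tabulate the straight line, 3-way, 4-way and 5-points cases.
    for r in range(2, 6):
        print(f"R = {r}: C = {intersection_complexity(r)}")
```

Running it reproduces the numbers above: 2, 6, 12 and 20.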

Rotaries have a different rule. Each entry at a rotary has, in effect, an R of 2. The choice is limited by 1) spreading out the intersections around the rotary and 2) making travel in the rotary one way. This turns the rotary into a series of two-road (R = 2) intersections, and the complexity of the entire rotary can be considered the sum of those intersections.

So, in this case, a four-way rotary has four entries at a complexity of 2 each, for a total of 8, as opposed to the complexity level of 12 for a four-road (R = 4) straight intersection.

Notice that while the complexity of a conventional intersection climbs quadratically, the complexity of a rotary grows linearly: 2 for every road. This means we can add roads to a rotary with much less impact than adding the same roads to a conventional intersection. However, it also says that for a small intersection (three roads or fewer), the complexity of a conventional intersection is less than or equal to that of a rotary.
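To see where the two rules cross, here's a small Python sketch comparing them side by side. It models the rotary exactly as described above, as one R = 2 intersection per entry, which works out to 2R; the function names are my own.

```python
def intersection_complexity(roads: int) -> int:
    """Conventional intersection: C = R * (R - 1)."""
    return roads * (roads - 1)


def rotary_complexity(roads: int) -> int:
    """Rotary: one two-road (R = 2) intersection per entry, summed."""
    return sum(intersection_complexity(2) for _ in range(roads))


if __name__ == "__main__":
    for r in range(2, 7):
        print(f"R = {r}: intersection {intersection_complexity(r):2d}, "
              f"rotary {rotary_complexity(r):2d}")
```

At R = 2 the plain road wins (2 against 4), at R = 3 they tie at 6, and from R = 4 on the rotary pulls ahead and keeps widening the gap.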

However, rotaries were often the victims of their own efficiency. One could get onto a rotary faster than one could get off. This caused nightmarish congestion. The Brits came to our rescue and redesigned the rotary in the 1960s. The big change was the addition of a precedence rule: vehicles in the rotary have priority over those entering it.

(This, by the way, was always the rule in Massachusetts, but drivers often had difficulty understanding it. The problem of driver precedence, I think, was one reason rotaries fell out of favor.)

But there is a hidden complexity in the rotary. The 4-way intersection is intended to be traversed one at a time. Time is not a factor except as measured by the impatience of other motorists.

In the rotary, however, vehicles are on the move. Time is a significant factor. The rotary has to be sized so that a given vehicle has enough time to get on and off. Ideally, this is done without much slowing down.

If we take 30 mph as an ideal speed of traversal, that's 44 feet/second. Let's allow 3 seconds at a given intersection in the rotary just to have room. That's 132 feet between intersections. A simple two road rotary would have to be 264 feet in circumference or 84 feet in diameter.
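The arithmetic above generalizes easily. Here's a Python sketch that sizes a rotary from the speed, the seconds allowed per entry, and the number of roads. It assumes, as the paragraph does, that entries are evenly spaced around a circle; the helper names are hypothetical.

```python
import math

FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def mph_to_fps(mph: float) -> float:
    """Convert miles per hour to feet per second (30 mph is exactly 44 ft/s)."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def rotary_size(roads: int, mph: float = 30.0,
                seconds_per_entry: float = 3.0) -> tuple[float, float]:
    """Return (circumference, diameter) in feet for a rotary whose entries are
    spaced so a car at `mph` spends `seconds_per_entry` between them."""
    spacing = mph_to_fps(mph) * seconds_per_entry  # feet between entries
    circumference = spacing * roads
    return circumference, circumference / math.pi


if __name__ == "__main__":
    for roads in (2, 4):
        around, across = rotary_size(roads)
        print(f"{roads} roads: {around:.0f} ft around, {across:.0f} ft across")
```

Two roads gives the 264 feet around and 84 feet across from the text. A four-road rotary under the same assumptions doubles to 528 feet around, about 168 feet across.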

I'm not sure how to evaluate this numerically. If 30 mph is the proper speed, then the complexity must change as the size of the rotary changes. The number of choices remains the same, but the time in which to make them shrinks as the rotary does.
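One way to put a number on the time side is to flip the sizing calculation around and ask how many seconds a driver gets between decisions for a given diameter. This Python sketch assumes a fixed speed and evenly spaced entries (both my simplifications), and `seconds_between_entries` is a hypothetical helper, not a standard traffic-engineering formula.

```python
import math


def seconds_between_entries(diameter_ft: float, roads: int,
                            mph: float = 30.0) -> float:
    """Seconds a car moving at `mph` spends travelling from one entry to the
    next, assuming `roads` entries evenly spaced around the circle."""
    feet_per_second = mph * 5280 / 3600
    spacing_ft = math.pi * diameter_ft / roads  # arc length between entries
    return spacing_ft / feet_per_second


if __name__ == "__main__":
    print(f"{seconds_between_entries(84, 2):.2f} s")  # the 84-foot, two-road rotary
    print(f"{seconds_between_entries(20, 4):.2f} s")  # a tiny four-road rotary
```

The 84-foot, two-road rotary gives back about three seconds per entry. A 20-foot rotary with four roads leaves roughly a third of a second at 30 mph, which is another way of saying nobody takes one at 30 mph.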

When I was visiting my home town in Missouri I saw what must be the smallest rotary imaginable. It could not have been more than 20 feet in diameter. Two cars draped across the center would hang off both ends. This little thing had four roads coming into it. If you didn't hang hard on the steering wheel, you would drive off the road. But I digress.

This is the sort of analysis engineers do on all sorts of things. There's a reason that data going across a network is called "traffic." Many of the original network topologies use road metaphors. One of the famous problems of mathematics, solved by Euler in 1735, is whether you can walk through Konigsberg crossing each of its seven bridges exactly once. (You can't.) That was the beginning of graph theory. Which was the start of that map program in your smart phone.
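Euler's insight was that only the number of bridges touching each piece of land matters: a connected map can be walked crossing every bridge exactly once only if zero or two land masses have an odd number of bridges. A small Python sketch of that check; the land-mass labels are my own.

```python
from collections import Counter

# The seven bridges of Konigsberg as edges between four land masses:
# the north and south banks, the Kneiphof island, and the eastern land mass.
BRIDGES = [
    ("north", "kneiphof"), ("north", "kneiphof"),
    ("south", "kneiphof"), ("south", "kneiphof"),
    ("north", "east"), ("south", "east"),
    ("kneiphof", "east"),
]


def degrees(edges):
    """Count how many bridges touch each land mass."""
    count = Counter()
    for a, b in edges:
        count[a] += 1
        count[b] += 1
    return count


def has_euler_path(edges) -> bool:
    """Euler's criterion: assuming the graph is connected (Konigsberg's is),
    a walk using every edge exactly once exists iff zero or two vertices
    have odd degree."""
    odd = sum(1 for d in degrees(edges).values() if d % 2 == 1)
    return odd in (0, 2)
```

All four land masses in Konigsberg have an odd count (5, 3, 3, 3), so `has_euler_path(BRIDGES)` is `False`. Remove the bridge between the Kneiphof and the east side and only two odd counts remain, so the answer flips to `True`.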

Which could help you navigate one of the Massachusetts rotaries.

Sunday, June 15, 2014

The Turing Test

Well, last time I spoke about how the Fermi Paradox irritated me. I'm on a curmudgeonly roll. Since it's in the news, let's go gunning for the Turing Test.

The Turing Test was invented, not surprisingly, by Alan Turing. It came from a paper entitled Computing Machinery and Intelligence and appeared in Mind in 1950. It was an attempt to determine whether machines could think without defining a "machine" or "think", since these were potentially ambiguous concepts. He wanted something that could get beyond this problem.

Turing proposed an "imitation game" where a judge must attempt to determine if a respondent is a human or a machine. To do this, the conversation between judge and candidate was made devoid of clues by using a teletype (remember, it was 1950). If the judge could not distinguish the machine's conversation from a human's, then the machine "won". If the judge could detect that the candidate was not human, the machine "lost."

Turing thought that if a computer could fool a human judge, it had shown itself sufficiently capable to be considered "intelligent."

I don't have a problem with the Turing Test as long as we understand a few things about it:

- It is a very limited kind of intelligence that is being tested

- It is limited by the context of the test and the biases of the judges

- It does not imply anything about the humanity or experiential nature of the candidate.

- It plays into human biases in that it presumes that something that is capable of indistinguishable imitation of a human is as intelligent as a human.

Maybe. Maybe not. Remember it's still a dog. Dogs are not motivated the same as humans. Their sensory system is very different. We are sight animals. They are smell animals. That alone is going to make the conversation interesting.

But the Turing Test presupposes that a human response is the correct response regarding intelligence. Consider if the situation were reversed and the dogs were giving a Turing Test to the humans. Perhaps a question might be, "Are you smelling my excitement right now?" (One should think that dogs would make a Turing Test that they would be able to pass.) The human would be unlikely to answer correctly and the dog professor would say sadly these humans are just not as smart as we canines. We share a lot with dogs. An intelligent extraterrestrial or computer program is going to be much harder.

We live in the context of our humanity. We should expect other, non-human intelligences to live in their own context. My point is that there are only two kinds of system that could genuinely pass a Turing Test. One is a program that is specifically designed to pass a Turing Test. It doesn't have to have any general intelligence. It's designed to show itself as intelligent in this very limited domain.

Which brings us to Eugene Goostman, the program that "won" the most recent Turing Test.

Eugene Goostman is a chatterbot: a program specifically designed to hold conversations with humans. Goostman portrays itself as a 13-year-old boy from Ukraine who doesn't understand English all that well. I've read transcripts of some of Goostman's conversations. I'd argue that without the context Goostman supplies, it would not have passed. In my opinion, Goostman's programmers gamed the test.

But let's say a really clever program was designed from the ground up to hold meaningful conversations. Would it be intelligent? I don't think so. Intelligence is a tool that can be applied in many circumstances. Watson, the program that won at Jeopardy!, is a closer contender. Its intelligence won the game. Now the same system is being used in medical decisions for lung cancer.

An intelligent conversationalist would be one that donated its intelligence to the conversation. It might supply insight. Make connections. In short, do all the things we expect from a human conversation. It converses intelligently instead of having conversational intelligence. That is, its conversation derives from its intelligence. It's not just a smart program that's learned to fool us.

Which brings us to the second possible winner of a Turing Test-- a system (biological or otherwise) that is so smart it can model our context sufficiently that we would find it indistinguishable from a human being. Such a system would have to be more intelligent than a human being, not less.

But this all presumes the Turing Test has a purity it does not possess. Not only does the test only measure an extremely narrow view of intelligence-- behavioral conversation-- it presumes the judges are unbiased. As we saw with Goostman, this is not so. And it could never be so.

After all, humans imbue cats, dogs, insects, statues, rocks, cars and images on toast with human like qualities. We infer suspicion from inept sentence structure. We infer honesty when it's really spam. We infer love from typewritten conversation when it's really sexual predation. Put two dots over a curve and we inevitably see a face. Give us a minimum of conversational rigor and we inevitably determine that it's human.

Humans can tell what's legitimately human and what's not a lot of the time. But we don't do it from characters on a screen. We detect it from motion or facial expression. We detect it from tone of voice or contextual cues. We know when something that purports to be human, isn't, if we can bring our tools to bear on it.

For example, there's the uncanny valley. This is when an artificial depiction of a human being gets very close to presenting as human, but not quite. People get uncomfortable. It happened with the animated figures in The Polar Express: the characters on the train were just a little creepy. Exaggerated figures such as Hatsune Miku or the characters from Toy Story are fine: they're clearly not human and don't trigger that reaction. Think of the characters in Monsters, Inc.: monsters all, with the exception of Boo. But we were able to fill in the humanity they lacked. (The fact that the story was brilliant is beside the point.)

Alan Turing was a genius but, personally, I don't think the Turing Test is one of his best moments. It's an extremely blunt tool for measuring something that requires precision.

I invite the system under test to come with me to a family reunion with my in-laws. Navigating that is going to take some real intelligence.

Sunday, June 8, 2014

The Fermi Irritation

(I meant to upload this and thought I had. But I didn't. Oh, well. Sorry.)

I'm on my way home from work and I’m in a bad mood. So, I’m going to talk about something that regularly irritates me.

Like Pap in Huckleberry Finn, "Whenever his liquor begun to work he most always went for the govment." I go for the Fermi Paradox.

Enrico Fermi came up with it. Essentially, it says: the universe is unimaginably old. We arose. If we’re typical, surely in all that time some other intelligent race has, too. Why don’t we see them?

The Paradox is rather a Rorschach test. It reflects more the point of view of the person discussing the Fermi Paradox than the Paradox itself.

The Fermi Paradox has been discussed over and over, both in science and in the science fiction communities.

The science community came up with the Drake Equation, a way of formulating the variables of the problem. This comes right out of Wikipedia:

N = R* × fp × ne × fl × fi × fc × L

where:

- N = the number of civilizations in our galaxy with which radio-communication might be possible (i.e. which are on our current past light cone);

- R* = the average rate of star formation in our galaxy

- fp = the fraction of those stars that have planets

- ne = the average number of planets that can potentially support life per star that has planets

- fl = the fraction of planets that could support life that actually develop life at some point

- fi = the fraction of planets with life that actually go on to develop intelligent life (civilizations)

- fc = the fraction of civilizations that develop a technology that releases detectable signs of their existence into space

- L = the length of time for which such civilizations release detectable signals into space
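Since the Drake Equation is just the product of those seven factors, it's easy to play with. This Python sketch uses made-up placeholder values (they are not estimates from anywhere) purely to show how far a single unknown swings the answer.

```python
import math


def drake(r_star: float, f_p: float, n_e: float, f_l: float,
          f_i: float, f_c: float, lifetime_years: float) -> float:
    """N = R* x fp x ne x fl x fi x fc x L.

    r_star is in stars per year and lifetime_years in years, so N comes out
    as a plain count of civilizations."""
    return math.prod([r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years])


if __name__ == "__main__":
    # Placeholder values for illustration only; nobody knows the real ones.
    guesses = dict(r_star=1.0, f_p=0.5, n_e=2.0, f_l=0.5,
                   f_i=0.1, f_c=0.1, lifetime_years=10_000)
    print(f"N = {drake(**guesses):.1f}")
    guesses["f_l"] = 0.001  # change just one unknown
    print(f"N = {drake(**guesses):.1f}")
```

With these placeholders N comes out around 50. Drop fl from 0.5 to 0.001 and N falls to about 0.1: same equation, a factor of 500 apart, from one variable nobody can pin down.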

But that’s really only saying what we would have to know in order to figure out the probabilities. Without values for the variables, we really know next to nothing.

There have been several SF books explaining why we haven’t heard from our neighbors. Personally, I like Larry Niven’s idea from World of Ptavvs. He suggested that intelligence was a naturally occurring phenomenon. But over a billion years ago a violent telepathic race of enslavers, about to be overwhelmed by a slave uprising, beamed out a massive command to commit suicide.

It's pretty quiet out there. No escaping that.

Many people who discuss this problem fall into one of two camps:

- We have no evidence of them. Therefore, human beings are a singular event.

- We have no evidence of them so we should keep looking. Something is surely there.

Personally, I’m in the latter camp. Not because there is any real hope of detecting them (I don’t think there is) but because if there were evidence, we’d be damned fools not to check.

There are a couple of problems with the paradox itself. For one thing, it is instantaneous: it must always be analyzed in terms of the known world at the time of analysis. Until just a few years ago we had no idea how many planets, or what kinds, were in the Milky Way. If planets were a rare event, we would expect planetary life to be an equally rare event. Now we have a better understanding of planets: there are lots of them. The current estimate of Earth-like planets, at least in size and mass, is about 100 billion.

But that is spread out over the whole galaxy. And it doesn’t account for time.

Life has been on this planet for nearly four billion years. But we didn’t have complex multicellular life until only about 600-700 million years ago. Invasion of the land was about 300 million or so years later. Mammals didn’t come into their own until 65 million years ago, and our genus didn’t get started until about 2 million years ago. Humans have been thinking for less than 250k years, and we’ve only been in a position to actually detect extraterrestrial life for a little over a century.

Of those 100 gigaplanets, how many, like Mars, had their life opportunities come and go? For all we know, Mars had a thriving civilization about a billion years ago.

All evidence points to a relativistic universe. That is, we are limited by the speed of light. Physical travel between the stars is probably beyond us, or it may require us to give up our humanity. We might end up striding across the galaxy as powerful as gods, but we’ll no longer have a human perspective.

A better detection mechanism is some sort of photon emission: radio, visible light, etc. We can signal each other like candles in the dark.

But even that has a duration. The span of frequencies that we manipulate is called the spectrum. As we’ve found over the years, broad use of the spectrum is wasteful. Consequently, the radio spectrum is cut up like fine cheese. In addition, not all parts of the spectrum are created equal. Some portions (radio, for example) are particularly nice for wireless communication. Visible light is very nice for carrying signal, but it doesn’t broadcast very well. You can communicate with a laser, but you need to aim it particularly well or push it down a pipe like optical fiber. Scarcity and demand determine price, and spectrum has gotten expensive. It's not going to get any cheaper.

I expect that we (and, by extension from our sample size of one, everybody else) will refine our use of spectrum away from broadcasts that can be picked up by our neighbors and direct more of it where it will be useful. That’s going to reduce our stellar footprint. In addition, we are already getting far more efficient in how much signal we actually use. Some of our satellites barely put out more watts than a cell phone, relying instead on better receivers. Detect that, Antares!

And that is presuming a detectable civilization (i.e., us) even survives.

That, I think, is the biggest problem with the first camp in the Fermi Paradox debate. They are inherently optimistic that, in the broad expanse of time, at least one group must have survived long enough for us to detect it. Its absence, they conclude, must indicate our singular existence.

Think back on our own evolutionary history. Life was here for nearly four billion years before we could detect or be detected. If, say, humans lasted a million years, we could miss talking with our neighbors four thousand times over by being just a little out of sync. They started their climb a million years after us: we miss. A million years before: we miss. And that presumes a million-year life span for human beings. It doesn't take into account that evolution is always going on. We have no idea what Homo sapiens will evolve into. Only that in a million years we won't be Homo sapiens.
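The four thousand is just four billion years divided by a million. To put a rough number on missing each other, here's a toy Python model built on heavy assumptions of my own: only two civilizations, each detectable for a fixed window, with start times that are independent and uniformly random across a four-billion-year span, ignoring distance and light travel time entirely.

```python
def overlap_probability(span_years: float, lifetime_years: float) -> float:
    """Toy model: two civilizations are each detectable for `lifetime_years`.
    Each start time is independent and uniform over the range that keeps the
    window inside `span_years`. Returns the chance the windows overlap."""
    starts = span_years - lifetime_years  # width of the range of start times
    if lifetime_years >= starts:
        return 1.0  # windows this long relative to the span always overlap
    # The windows miss when the start times differ by at least lifetime_years;
    # for two uniform starts on [0, starts] that happens with this probability.
    miss = ((starts - lifetime_years) / starts) ** 2
    return 1.0 - miss


if __name__ == "__main__":
    span, life = 4e9, 1e6
    print(f"{span / life:.0f} windows of a million years in four billion")
    print(f"chance of overlap: {overlap_probability(span, life):.5f}")
```

With a million-year window the chance of overlap comes out to about 0.0005, roughly one in two thousand, and that is before distance gets a vote.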

There might be gods out there: species so stable and intelligent that they have lasted across the time of our ascent. But they will have a god’s perspective. Which means that there is no determining whether they would want to, or be able to, detect us, or whether we would be able to detect them. If they created a supernova to send a message to their fellows in Andromeda, we’d never recognize it as anything other than random noise. Unless, of course, they took an interest in us. They just made a movie about the last time we interested a deity overmuch. It’s called Noah.

But I don’t think there are gods out there. I suspect if we have neighbors they're pretty much like us: fumbling their way into a greater universe with the meager tools evolution gave them. Putting out enough radio waves to show themselves but too far away or out of sync to be seen.

We’re probably not alone out here. But we may as well be.
